© Borgis - New Medicine 2/2013, pp. 55-61

*Eszter Borján1, Zoltán Balogh1, Judit Mészáros2

Evaluating the effectiveness of two simulation courses for midwifery students

1Department of Nursing, Faculty of Health Sciences, Semmelweis University, Budapest, Hungary

Head of Department: Zoltán Balogh, PhD

2Faculty of Health Sciences, Semmelweis University, Budapest, Hungary

Dean of the Faculty of Health Sciences: Prof. Judit Mészáros, PhD

Summary

Aim. The aim of this study was to evaluate the effectiveness of two simulation courses for midwifery students.

Material and methods. Thirty midwifery students at the Faculty of Health Sciences, Semmelweis University, were enrolled in the study between February and May 2011. This descriptive study examined the effectiveness of a common compulsory course, “Clinical simulation”, and of the special course that follows it, “Case studies in simulation”, using the METI Simulation Effectiveness Tool (SET). Students completed the SET after the common compulsory course and again after the special course by rating its statements. The authors then compared the two sets of results.

Results. The common compulsory course, “Clinical simulation”, was effective. After the special course, “Case studies in simulation”, we observed remarkable improvement in assessment skills, critical thinking skills and self-confidence.

Conclusion. METI SET is a useful tool for evaluating our students’ perception; however, more objective assessment tools should be used for the evaluation of our students’ development in simulation.

INTRODUCTION

The worldwide use of high-fidelity human patient simulators in nursing and midwifery education programs has increased in the past ten years. Universities face increased student intakes, fewer clinical placements and limited patient availability (1, 2). Nevertheless, students require innovative and successful learning strategies in order to be prepared more effectively for real clinical practice. Simulation is an efficient method because it supports multiple learning objectives in a realistic clinical environment without harming patients (1).

We started to integrate simulation into the curriculum in 2008, when we received our first METI (Medical Education Technologies, Inc.) Emergency Care Simulator (ECS). Since 2011 we have also had a METIman Nursing and a METI Baby Sim simulator.

Curriculum development has been a four-year process that is not yet finished. Simulation has been integrated into the curriculum for all undergraduate students, so each of our students has the opportunity to practice basic assessment and technical skills with a simulator. The common compulsory course is called “Clinical simulation”. It includes elementary-level scenarios for all students in different fields of health care. Its prerequisite is the subject “Basics of Health Sciences”, which provides general knowledge and practice in the fields of nursing (3). After completing the “Clinical simulation” course, nursing and midwifery students have further opportunities to practice on the simulator using the METI PNCI (Program for Nursing Curriculum Integration) learning package (4). We have developed two special programs for nursing and midwifery students; the special course is called “Case studies in simulation”. Although we do not yet have a dedicated birth simulator, we can use the METI ECS and METIman Nursing simulators for midwifery students as well. When planning the simulation program for midwifery students, we chose the most appropriate scenarios (simulated clinical experiences, SCEs) from the METI PNCI learning package, considering the midwife’s role in different clinical fields (tab. 1). The prerequisite of this course is the subject “Basics of Nursing”, which covers the basic knowledge and skills for nursing and midwifery students.

Table 1. Scenarios from the METI PNCI learning package.

| Title of the scenario | Reason for the choice of scenario |
| --- | --- |
| Hyperemesis Gravidarum | obstetric scenario |
| Pregnancy Induced Hypertension | obstetric scenario |
| Postpartum Hemorrhage | obstetric scenario |
| Amniotic Fluid Embolism | obstetric scenario |
| Postop Ileus | common complication after operation |
| Postop DVT | common complication after birth or operation |

While using human patient simulators we have experienced most of the advantages of this new teaching and learning strategy, but we have also realized that we must measure the effectiveness of our work to ensure that we teach our students in the best way. Many evaluation instruments can be found in the literature, but in most cases their validity and reliability are unknown. Further use and development of simulation evaluation instruments are of the highest importance (5).

AIM

The first aim of this study was to evaluate the effectiveness of the special simulation course for midwifery students by comparing the results measured after the common compulsory course, “Clinical simulation”, with those measured after the special course, “Case studies in simulation”. The second aim was to analyse the fields in which students developed after the two courses. For the comparison we used the METI Simulation Effectiveness Tool (SET).

The research questions of this study were as follows:

1. Was the common compulsory “Clinical simulation” course effective?

2. Did we experience any improvement after the special course?

3. Can we improve all fields of knowledge and all skills during one course?

MATERIAL AND METHODS

This descriptive study examined the effectiveness of the two courses using the METI Simulation Effectiveness Tool (SET)*. This multi-item tool, developed by experts at METI, is a valid and reliable instrument. It comprises 13 statements and measures three aspects of learning outcome: skills or knowledge gained as a result of the simulated cases, confidence level, and satisfaction attitudes. The 13 statements are shown in table 2.

Table 2. Evaluation of the two courses by midwifery students.

| Statements of METI SET | After the common compulsory course: “Clinical simulation” | After the special course: “Case studies in simulation” |

| N = 30 = 100% | Do Not Agree | Somewhat Agree | Strongly Agree | Not Applicable | Do Not Agree | Somewhat Agree | Strongly Agree | Not Applicable |

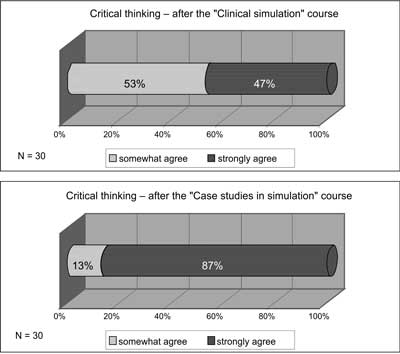

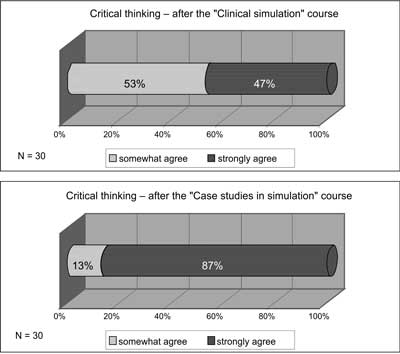

| 1. The instructor’s questions helped me to think critically | | 53% | 47% | | | 13% | 87% | |

| 2. I feel better prepared to care for real patients | | 33% | 60% | 7% | | 33% | 64% | 3% |

| 3. I developed a better understanding of the pathophysiology of the conditions in the SCE | | 33% | 67% | | | 40% | 60% | |

| 4. I developed a better understanding of the medications that were in the SCE | 16% | 70% | 14% | | 13% | 74% | 13% | |

| 5. I feel more confident in my decision making skills | 10% | 67% | 23% | | 3% | 67% | 30% | |

| 6. I am more confident in determing what to tell the healthcare provider | 6% | 47% | 37% | 10% | 10% | 40% | 47% | 3% |

| 7. My assessment skills improved | 6% | 37% | 57% | | | 16% | 84% | |

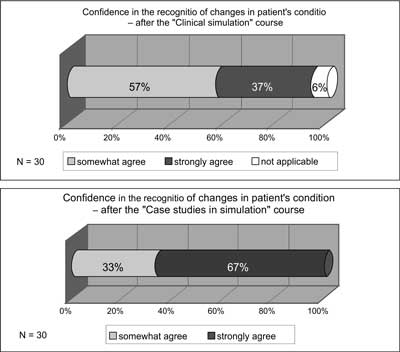

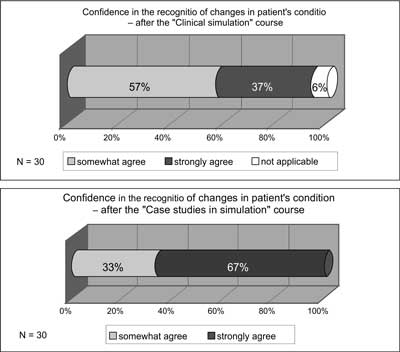

| 8. I feel more confident that I will be able to recognize changes in my real patient’s condition | | 57% | 37% | 6% | | 33% | 67% | |

| 9. I am able to better predict what changes may occur with my real patient | 13% | 53% | 24% | 10% | 3% | 70% | 27% | |

| 10. Completing the SCE helped me understand classroom information better | | 30% | 70% | | | 27% | 73% | |

| 11. I was challenged in my thinking and decision making skills | | 54% | 40% | 6% | 3% | 40% | 57% | |

| 12. I learned as much from observing peers as I did when I was actively involved in caring for the simulated patient | 23% | 57% | 14% | 6% | 13% | 70% | 14% | 3% |

| 13. Debriefing and group discussion were valuable | | 13% | 76% | 10% | | 16% | 80% | 3% |

Thirty midwifery students at the Faculty of Health Sciences, Semmelweis University, were enrolled in the study between February and May 2011. None of them had yet started real clinical practice, so all were at the “Novice” level. Students completed the SET after the common compulsory course, “Clinical simulation”, by rating the statements of the tool. The same students completed the SET again after the special course, “Case studies in simulation”. The authors compared the results of the two courses.

Data analysis was performed with the statistical program SPSS for Windows, version 15.0.
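The percentages in table 2 are, in effect, the share of the 30 respondents who chose each response option for a statement. As a minimal sketch of that tabulation (not the SPSS procedure used in the study), the Python snippet below counts responses and converts them to whole percentages. The function name `response_distribution` and the raw answers are hypothetical; the study reports only percentages, not individual responses.

```python
from collections import Counter

# The four response options of the SET as listed in table 2.
CATEGORIES = ["Do Not Agree", "Somewhat Agree", "Strongly Agree", "Not Applicable"]


def response_distribution(responses):
    """Return the percentage of responses in each category, rounded to whole percent."""
    if not responses:
        raise ValueError("no responses to tabulate")
    counts = Counter(responses)
    unknown = set(counts) - set(CATEGORIES)
    if unknown:
        raise ValueError(f"unexpected response labels: {sorted(unknown)}")
    total = len(responses)
    return {cat: round(100 * counts[cat] / total) for cat in CATEGORIES}


# Hypothetical raw answers of 30 students to one statement (illustrative only).
example = ["Somewhat Agree"] * 16 + ["Strongly Agree"] * 14
distribution = response_distribution(example)
print(distribution)
```

With 30 respondents each student accounts for roughly 3.3 percentage points, which is why rounded values such as 47% and 53% appear in the table.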

RESULTS

Research Question 1: Was the common compulsory “Clinical simulation” course effective?

Students evaluated the 13 statements of the METI SET after the common compulsory course. A high percentage of students agreed somewhat or strongly with all of the statements (tab. 2). The most remarkable results were as follows. Statement 2, “I feel better prepared to care for real patients”: 60% of the students strongly agreed. Statement 3, “I developed a better understanding of the pathophysiology of the conditions in the SCE”: 67% of participants strongly agreed. Statement 7, “My assessment skills improved”: 57% of students strongly agreed. Statement 10, “Completing the SCE helped me understand classroom information better”: 70% of participants strongly agreed. Statement 13, “Debriefing and group discussion were valuable”: 76% of the students strongly agreed.

Research Question 2: Did we experience any improvement after the special course?

Students evaluated the statements of the METI SET again after the special course. We expected better results in some of the examined fields after the course “Case studies in simulation” than after the common compulsory course “Clinical simulation” (tab. 2).

For statement 1, “The instructor’s questions helped me to think critically”, the difference was remarkable: 47% of students strongly agreed after the common compulsory course and 87% after the special course (fig. 1). For statement 5, “I feel more confident in my decision making skills”, the results were slightly better after the special course (30% of students strongly agreed) than after the common compulsory course (23%), but the difference was not remarkable. For statement 6, “I am more confident in determining what to tell the healthcare provider”, we recorded some improvement: 37% of students strongly agreed after the common compulsory course and 47% after the special course. For statement 7, “My assessment skills improved”, the difference was remarkable: the proportion of students who strongly agreed rose from 57% after the common compulsory course to 84% after the special course (fig. 2). For statement 8, “I feel more confident that I will be able to recognize changes in my real patient’s condition”, the difference was also impressive: the proportion of participants who strongly agreed was 37% after the common compulsory course and 67% after the special course (fig. 3). For statement 9, “I am able to better predict what changes may occur with my real patient”, we did not observe a large difference: 53% of participants somewhat agreed after the common compulsory course and 70% after the special course. For statement 11, “I was challenged in my thinking and decision making skills”, the results were slightly better after the special course: 40% of students strongly agreed after the common compulsory course and 57% after the special course. For statement 12, “I learned as much from observing peers as I did when I was actively involved in caring for the simulated patient”, we observed some improvement: 57% of participants somewhat agreed after the common compulsory course and 70% after the special course.

Fig. 1. Distribution of students’ responses to critical thinking skill.

For statements 2, 3, 4, 10 and 13 there were no remarkable differences between the results after the common compulsory course and those after the special course (tab. 2).
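The comparison between the two courses rests on simple differences between percentages. As an illustrative sketch only, the snippet below takes the “Strongly Agree” percentages from table 2 and computes the change in percentage points for each statement, then lists the three largest increases. The names `STRONGLY_AGREE` and `changes_in_points` are hypothetical, and no significance test is implied; the study does not state a numerical threshold for a “remarkable” difference.

```python
# "Strongly Agree" percentages from table 2, keyed by statement number:
# (after the common compulsory course, after the special course).
STRONGLY_AGREE = {
    1: (47, 87),
    2: (60, 64),
    3: (67, 60),
    4: (14, 13),
    5: (23, 30),
    6: (37, 47),
    7: (57, 84),
    8: (37, 67),
    9: (24, 27),
    10: (70, 73),
    11: (40, 57),
    12: (14, 14),
    13: (76, 80),
}


def changes_in_points(table):
    """Return the change in percentage points (special minus compulsory) per statement."""
    return {statement: after - before for statement, (before, after) in table.items()}


changes = changes_in_points(STRONGLY_AGREE)

# Statements with the three largest increases in "Strongly Agree".
largest = sorted(changes, key=changes.get, reverse=True)[:3]
for statement in largest:
    before, after = STRONGLY_AGREE[statement]
    print(f"Statement {statement}: {before}% -> {after}% ({changes[statement]:+d} points)")
```

The three largest increases fall on statements 1, 8 and 7, the same statements that the text describes as remarkable or impressive.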

Research Question 3: Can we improve all fields of knowledge and all skills during one course?

We observed some improvement after the special course in critical thinking, self-confidence and assessment skills. Our results suggest that it is not possible to improve all fields of knowledge and all skills during one course.

DISCUSSION

Using the METI Simulation Effectiveness Tool (SET) to evaluate the results of the two courses, we observed improvement in some of the examined fields. The tool is a multi-item instrument designed to measure simulation effectiveness; it can show how effective the simulated learning experience is in meeting students’ learning needs. Its development was linked to the creation of the standardized simulation program for nursing education of Medical Education Technologies, Inc. (METI), entitled Program for Nursing Curriculum Integration (PNCI). The PNCI package includes more than 90 evidence-based simulated clinical experiences (SCEs) in different fields of nursing. The validity and reliability of the METI SET have been investigated; the total Cronbach’s alpha reliability of the tool was 0.93 (6).
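Cronbach’s alpha, the reliability coefficient quoted above, is computed for k items as k/(k - 1) multiplied by (1 - sum of the item variances / variance of the total score). The sketch below is a generic illustration of that formula, not the analysis reported in (6). The function name `cronbach_alpha`, the numeric coding of the response options and the five respondents are all made up for the example.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(scores[0])
    if k < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two respondents")
    if any(len(row) != k for row in scores):
        raise ValueError("every respondent must answer the same number of items")
    # Sample variance of each item (column) and of the per-respondent total score.
    item_variances = [variance(item) for item in zip(*scores)]
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical coding: 0 = Do Not Agree, 1 = Somewhat Agree, 2 = Strongly Agree.
# Five made-up respondents answering three made-up items.
hypothetical_scores = [
    [2, 2, 1],
    [1, 1, 1],
    [2, 1, 2],
    [0, 1, 0],
    [2, 2, 2],
]
alpha = cronbach_alpha(hypothetical_scores)
print(f"alpha = {alpha:.2f}")
```

Values close to 1 indicate that the items vary together, which is how a figure such as 0.93 is usually read.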

One of the most remarkable differences between the two courses was found for statement 1, “The instructor’s questions helped me to think critically” (fig. 1). Although the results are based on students’ self-reported data, we regard this as a successful learning outcome. Critical thinking skills are more important than ever before, because nurses deal with critically ill patients, use advanced technology, and cope with a continually changing knowledge base (7). Kaddoura reported that study participants developed their critical thinking skills during simulation and that clinical simulation increased their confidence in dealing with critical situations (8). Promoting critical thinking skills is a key component of nursing education (8, 9).

During the common compulsory course, “Clinical simulation”, students learn at a basic level, and our main goal was to improve their assessment skills. During the special course, “Case studies in simulation”, our most important goal was to promote both their assessment and their critical thinking skills. We also regard it as a good result that after the special course most of the students felt that their assessment skills had improved (fig. 2).

Fig. 2. Distribution of students’ responses to assessment skills.

The Dreyfus model posits that, in the acquisition and development of a skill, students pass through five levels of proficiency: novice, advanced beginner, competent, proficient and expert. Patricia Benner started to apply the Dreyfus model to nursing education and created Benner’s stages of clinical competence (10, 11). Benner’s theory about the nurses’ stages of learning fits simulation learning well because simulation assists students in advancing from novice level to advanced beginner level of competency within the safety of a lab setting (2).

At the “Novice” level, students have had no experience of the situations in which they are expected to perform. Novices are taught rules to help them perform, but they have no real experience in applying those rules. The “Novice” might be characterized by the following sentence: “Just tell me what I need to do and I will do it” (tab. 3) (10, 11).

Table 3. Characteristics of the “Novice” level by Patricia Benner (10).

Beginners with no experience of the situations in which they are expected to perform

To give them insight into those situations and allow them to gain experience

Rules are: context-free, independent of specific cases, and applied universally

The rule-governed behavior is extremely limited and inflexible

Example: “Tell me what I need to do and I’ll do it.”

When analyzing our results we have to take into consideration that both after the common compulsory course and after the special course our students were at the “Novice” stage; none of them had yet started real clinical practice. At the “Novice” level, assessment is the most important skill during simulation. For statement 8, “I feel more confident that I will be able to recognize changes in my real patient’s condition”, the difference was remarkable (fig. 3). For statement 9, “I am able to better predict what changes may occur with my real patient”, we observed some difference (tab. 2). The reason for this improvement might be that better assessment skills helped the students to become more confident during the simulation. “Confidence is a belief in one’s own abilities to successfully perform a behavior” (12). Studies have shown that simulation can equip students with skills that can be transferred directly into clinical practice, leading to increased self-confidence (13).

Fig. 3. Distribution of students’ responses to confidence in the recognition of changes in patient’s condition.

For statement 5, “I feel more confident in my decision making skills”, the results were slightly better after the special course, but the difference from the results after the common compulsory course was not remarkable (tab. 2). Decision making is also a very important skill that can be improved during simulation, but it is very difficult for students at the “Novice” level. At the “Advanced beginner” level this skill might show more improvement than at the “Novice” level.

We did not observe any improvement for statement 2, “I feel better prepared to care for real patients” (tab. 2). A likely reason is that after these two courses the students had not yet started clinical practice, so they had no experience with real patients.

Debriefing takes place at the end of simulation. It is a guided discussion method that allows students to link theory to practice and to think critically. The group discusses the process, outcome, and application of the scenario to clinical practice and reviews relevant teaching points (13). Our results show that students found debriefing and group discussion valuable (statement 13) both after the common compulsory course and after the special course (tab. 2). Research has also reported that debriefing can support the development of critical thinking skills (13). We think that every simulation session can improve our students’ knowledge and skills in some way.

LIMITATIONS OF THE STUDY

One limitation of this study was the small sample size. A sample of this size (N = 30) might not allow the findings to be generalized to the midwifery student population. An additional limitation was that the METI Simulation Effectiveness Tool (SET) relies on students’ self-reported data; participants were both the raters and the rated persons of their simulation effectiveness. More objective assessment tools are recommended for a better evaluation of clinical simulation (6).
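To give a rough sense of what a sample of 30 means for a single reported percentage, the sketch below computes an approximate 95% Wilson score interval for a proportion. This calculation is not part of the study; the function name `wilson_interval` is hypothetical, and the count of 26 respondents is an assumption derived from the reported 87% of 30 students.

```python
from math import sqrt


def wilson_interval(successes, n, z=1.96):
    """Approximate 95% Wilson score interval for a proportion (z = 1.96)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    denominator = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denominator
    half_width = z * sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denominator
    return centre - half_width, centre + half_width


# Assumption: 87% of 30 students corresponds to about 26 respondents.
low, high = wilson_interval(26, 30)
print(f"26 of 30 = {26 / 30:.0%}, approximate 95% interval: {low:.0%} to {high:.0%}")
```

The interval spans more than twenty percentage points, which illustrates why findings from such a small group should be generalized with caution.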

CONCLUSIONS

Our results show remarkable improvement in assessment skills, critical thinking skills and self-confidence after the special course for midwifery students. We also found that it is not possible to improve all fields of knowledge and all skills during one course. At the beginning of a course we have to determine our goals and the fields we want to improve, taking the level of our students’ knowledge into consideration. The METI SET can be useful for continuous assessment and for evaluating our students’ perception; however, we have to use more objective assessment tools for evaluation. To make evaluation more objective, we are going to use video recording to analyse our students’ improvement during simulation.

Curriculum development is not yet finished. We should reevaluate our curriculum and the placement of simulation courses in it. Our results and experience suggest that we should provide simulation lessons at the “Advanced beginner” level in order to improve more skills (e.g. decision making) and help students achieve a higher level of competence.

*With the permission of CAE Healthcare/METI

References

1. Wilford A, Doyle TJ: Integrating simulation training into the nursing curriculum. Br J Nurs 2006; 15(11): 604-607.

2. Brown D, Chronister C: The effect of simulation learning on critical thinking and self-confidence when incorporated into an electrocardiogram nursing course. Clin Simulation Nurs 2009; 5(1): e45-e52.

3. Csóka M, Vingender I: A szimulátoros oktatás módszertana. Nővér 2010; 23(6): 22-39.

4. Borján E, Balogh Z, Mészáros J: Three-year teaching experience in simulation education. New Medicine 2011; 15(4): 138-142.

5. Kardong-Edgren S, Adamson KA, Fitzgerald C: A Review of Currently Published Evaluation Instruments for Human Patient Simulation. Clin Simulation Nurs 2010; 6(1): e25-e35.

6. Elfrink Cordi VL, Leighton K, Ryan-Wenger N et al.: History and Development of the Simulation Effectiveness Tool (SET). Clin Simulation Nurs 2012; 8(6): e199-e210.

7. Smith-Blair N, Neighbors M: Use of the critical thinking disposition inventory in critical care orientation. J Contin Educ Nurs 2000; 31(6): 251-256.

8. Kaddoura MA: New graduate nurses’ perceptions of the effects of clinical simulation on their critical thinking, learning, and confidence. J Contin Educ Nurs 2010; 41(11): 506-516.

9. Ravert P: Patient simulator sessions and critical thinking. J Nurs Educ 2008; 47(12): 557-562.

10. Benner P: From Novice to Expert. Excellence and Power in Clinical Nursing Practice, Addison-Wesley Publishing Company, Inc. 1984.

11. Tulkán I: Ápolói kompetenciák mérése különös tekintettel a területi gyakorlatokra. PhD értekezés, Semmelweis Egyetem 2010.

12. Brannan JD, White A, Bezanson JL: Simulator effects on cognitive skills and confidence levels. J Nurs Educ 2008; 47(11): 495-500.

13. Jeffries PR: A framework for designing, implementing, and evaluating simulations used as teaching strategies in nursing. Nurs Educ Perspect 2005; 26: 96-103.